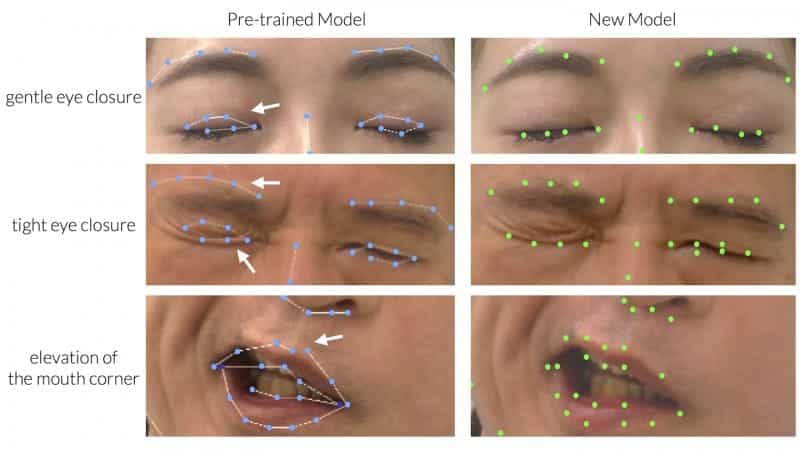

A “fine-tuned” AI approach, including manual annotation of facial keypoints, showed clear improvement in accuracy for automated evaluation of facial palsy.

A “fine-tuned” artificial intelligence (AI) tool shows promise for objective evaluation of patients with facial palsy, reports an experimental study in the June issue of Plastic and Reconstructive Surgery.

“We believe that our research offers valuable insights into the realm of facial palsy evaluation and presents a significant advancement in leveraging AI for clinical applications,” says lead author Takeichiro Kimura, MD, of Kyorin University, Mitaka, Tokyo, in a release.

Refined Tool for Automated Video Analysis of Facial Palsy

Patients with facial palsy have paralysis or partial loss of movement of the face, caused by nerve injury due to tumors, surgery, trauma, or other causes. Detailed assessment is essential for evaluating treatment options, such as nerve transfer surgery, but poses difficult challenges.

Various subjective scoring systems have been developed but have problems with variability. Objective assessments have been described but are impractical for routine clinical use. Machine learning and AI models are a potential approach for routine, objective assessment of facial palsy.

Kimura and colleagues evaluated a previously developed AI facial recognition model, called 3D-FAN, in patients with facial palsy. That system was trained to recognize 68 facial keypoints, such as eyebrows and eyelids, nose and mouth, and facial contours.

When applied to clinical videos, 3D-FAN, which was trained on images of people with normal facial movement, was clearly insufficient for assessing facial palsy. The system tended to miss the facial asymmetry characteristic of facial palsy, including when patients were instructed to smile, and failed to recognize when the eyes were closed.

AI Tool Shows Promise for Objective Ratings of Facial Palsy Severity

Kimura and colleagues attempted to “fine-tune” the model using machine learning, based on 1,181 images from clinical videos of 196 patients with facial palsy. In this process, facial landmarks were manually relocated to the correct position, with steps to minimize variability. Training sessions were then repeated until there was no further improvement in accuracy.
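The stopping rule described here, repeating training sessions until accuracy no longer improves, can be illustrated with a minimal Python sketch. It is not the authors' code: the function name `train_until_no_improvement`, the `run_session` callback, and the `patience` and `max_sessions` parameters are all hypothetical, and the error values in the usage note are made up for illustration.

```python
from typing import Callable, Tuple


def train_until_no_improvement(
    run_session: Callable[[], float],
    patience: int = 1,
    max_sessions: int = 100,
) -> Tuple[float, int]:
    """Repeat training sessions until the validation error stops improving.

    run_session is a hypothetical callback that runs one training session
    and returns the resulting validation error (lower is better).
    Returns the best error seen and the number of sessions run.
    """
    best_error = float("inf")
    sessions_without_gain = 0
    sessions_run = 0

    while sessions_run < max_sessions and sessions_without_gain < patience:
        error = run_session()
        sessions_run += 1
        if error < best_error:
            # This session improved on the best result so far.
            best_error = error
            sessions_without_gain = 0
        else:
            # No improvement; count it toward the stopping threshold.
            sessions_without_gain += 1

    return best_error, sessions_run
```

For example, with simulated session errors of 0.50, 0.40, 0.35, and 0.36 supplied through `errors = iter([...])` and `run_session=lambda: next(errors)`, the loop stops after the fourth session, because 0.36 does not improve on 0.35.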

“After machine learning, we found qualitative and quantitative improvement in the detection of facial keypoints by the AI,” Kimura and colleagues write. The refined model showed substantially lower error rates, with improvement in keypoint detection in every area of the face, including the eyelids and mouth—key areas of asymmetry in facial palsy. The article includes illustrations clearly showing the improvement in keypoint detection after machine learning.
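The article does not say which error measure the study used. As an assumption for illustration only, the sketch below computes a normalized mean error, a measure commonly used to compare predicted facial keypoints with manually annotated ones. The function name `normalized_mean_error` and the choice of normalizing distance (for instance, the distance between the outer eye corners) are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def normalized_mean_error(
    predicted: Sequence[Point],
    annotated: Sequence[Point],
    norm_distance: float,
) -> float:
    """Average distance between predicted and annotated keypoints,
    divided by a reference distance so that face size does not matter.
    """
    if len(predicted) != len(annotated) or not predicted:
        raise ValueError("keypoint lists must be non-empty and equal in length")
    if norm_distance <= 0:
        raise ValueError("norm_distance must be positive")

    # Sum the Euclidean distance between each predicted/annotated pair.
    total = sum(math.dist(p, a) for p, a in zip(predicted, annotated))
    return total / (len(predicted) * norm_distance)
```

A lower value means the predicted keypoints sit closer to the manual annotations. Computing the score separately for eyelid or mouth keypoints would show whether improvement is concentrated in the regions most affected by facial palsy.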

The authors believe their “fine-tuning” method, with manual correction of landmarks in a limited number of images, “holds potential for broader applications of making AI-assisted models in other relatively rare disorders.” Pending further evaluation, the researchers plan to make their AI model freely available to other researchers and clinicians.

“Considering our software as one of promising solutions for objective assessment of facial palsy, we are now conducting a multidisciplinary analysis of the effectiveness of this system,” Kimura and coauthors conclude. By providing an objective score, the AI tool may enable more accurate ratings of facial palsy severity and serve as a quantitative means of assessing treatment outcomes.

Photo caption: “Fine-tuning” leads to a promising solution for objective assessment of facial paralysis.

Photo credit: Plastic and Reconstructive Surgery